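Introduction

The rapid development of artificial intelligence (AI) is continually reshaping our world. AI's interdisciplinary reach and capacity to learn are driving a profound transformation of humanity's knowledge structures, decision-making systems, and innovation trajectories.

However, given its disruptive nature and a technological landscape that remains unclear, its development could also lead to highly destructive outcomes. That prospect is especially worrying at a time when geopolitics and technological innovation are tightly intertwined.

How can the development of AI be managed so that its potentially harmful outcomes are avoided?

Mehri Madarshahi, adjunct professor at IPP and member of the advisory committee of the UNESCO International Centre for Creativity and Sustainable Development, argues that the top priority now is to jointly forge an inclusive, universally applicable AI governance architecture that allows the international community both to unlock AI's potential and to manage its risks properly.

★ Author: Mehri Madarshahi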

Main text

SHAPING THE FUTURE: EMBRACING DIGITAL TRANSFORMATION FOR ETHICAL GOVERNANCE MOVEMENTS

梅里·马达沙希:拥抱数字化转型,推动科技伦理治理

Artificial Intelligence (AI), once viewed as a mere fantasy, has rapidly emerged as the fastest-growing technology, with a wide range of applications.

过去人工智能只存在于人类的幻想中,如今已经成为发展最快的技术,在各领域均得到了广泛的应用。

The rise of AI, however, has raised concerns among regulators worldwide. Issues such as the potential for AI to surpass human control, algorithmic biases, the spread of misinformation, and the misuse of personal data have prompted discussions about the need for regulation.

然而,人工智能的崛起引发了全球监管机构的担忧,进而引发了有关人工智能监管话题的思考。人工智能存在的不可控性、算法歧视、虚假信息传播、个人数据滥用等问题,已经让国际社会意识到人工智能监管的必要性。

While AI holds great promise, its potential misuse carries significant risks: threats to public safety, erosion of social trust, and infringement on individual freedoms.

虽然人工智能的前景不可估量,但是一旦滥用,就会给公共安全带来巨大隐患,侵蚀社会信任乃至侵犯个人自由。

Impacts of Artificial Intelligence (Elysium Embedded School). Link: https://embeddedschool.in/how-does-artificial-intelligence-impacts-our-lives/

The dangers associated with online misinformation and the possibility of autonomous warfare have further emphasized the necessity for regulation. Nevertheless, regulating AI presents unique challenges as it combines powerful characteristics not observed in previous technologies.

人工智能在网络上生成虚假信息的能力及改变战争形态的潜力,进一步印证了人工智能监管的必要性。即便如此,人工智能前所未有的强大能力,迫使人工智能监管面临严峻挑战。

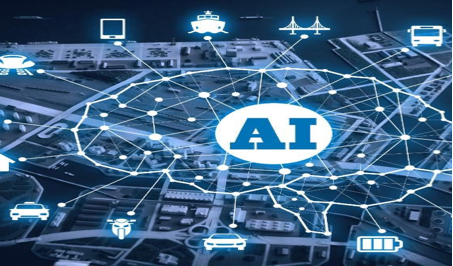

Today, in China and elsewhere, attention is focused on finding the most effective ways to leverage AI’s benefits while mitigating its inherent risks to human advancement and human safety. Unlike hardware-based technologies, which improve incrementally, AI systems possess the ability to self-direct, self-replicate, and self-improve beyond human control. Consequently, developing a comprehensive regulatory agenda for AI remains a complex endeavor and a global challenge.

今天,包括中国在内的多个国家,正在研究如何在充分运用人工智能潜力的同时,最大限度地减少由此对人类带来的潜在风险。与基于渐进式路径的技术不同,人工智能通常具有自我指导、自我复制、自我改进等特点。因此,制定人工智能的国际法规是一项长期性事业,也是一项全球性任务。

How should regulators address the concern that computers could surpass the control of their creators? Should they intervene in algorithms that may introduce bias or distort decisions that impact the lives of billions? Additionally, what measures should be taken to mitigate the risks associated with chatbots fueling the spread of online misinformation or the misuse of vast amounts of personal data? Regulators face the urgent task of addressing these critical questions.

监管机构应该如何解决计算机脱离人类控制的担忧?是否应该主动干预存在歧视风险或主动干预对数十亿人产生不利政治影响的算法?应该采取何种措施来降低虚假信息传播、个人数据滥用等与聊天机器人相关的风险?上述问题是监管机构面临的第一要务。

As recent global societal and political coverage has shown, AI could be used to generate and spread toxic misinformation, eroding social trust and democracy. It could also be used to surveil, manipulate, and subdue citizens, undermining individual and collective freedom, or to create powerful digital or physical weapons that threaten human lives.

最近有报道指出,人工智能可以用于制造和传播有害信息,侵蚀社会信任和民主,监视、操纵和限制个人行为,破坏个人和集体自由,甚至生产威胁人类生存的武器。

AI could destroy millions of jobs, worsen existing inequalities, create new ones, entrench discriminatory patterns, and distort decision-making by amplifying bad information feedback loops. Additionally, AI could spark unintended and uncontrollable military escalations that lead to wars.

与此同时,人工智能可能会导致数以百万计的就业机会流失,加剧现有的不平等,创造新的不平等,产生更严重的算法歧视,以及通过放大负面信息来扭曲政治决策。更重要的是,人工智能可能会引发无法预料、无法控制的军事升级,甚至可能引发战争。

Questions and doubts are not limited to these. The technology is moving so fast, and the potential risks are in some cases so poorly understood, that there is for now little agreement on an effective regulatory agenda worldwide.

上述风险只是冰山一角。由于人工智能发展速度过快,故在某些情况下,我们对其潜在风险知之甚少,以至于迄今为止,世界各国尚未就人工智能监管达成共识。

The recent advancements in large language model (LLM)-based systems such as ChatGPT have generated immense excitement about what they make possible, but they have also triggered renewed concerns about job displacement and a potential decline in demand for human labor across a wide variety of occupations, from software developers to screenwriters. A recent study conducted by researchers at OpenAI and the University of Pennsylvania highlights the extensive exposure of white-collar jobs to automation driven by LLMs and related tools.

大型语言模型(例如ChatGPT)的突破性进展,为人工智能的发展注入了强劲动力,但同时也引发了职业替代和劳动力需求下降的担忧。人工智能研究公司(OpenAI)与宾夕法尼亚大学最近合作进行的一项研究指出,大型语言模型及其他先进人工智能模型的问世,将对白领阶层造成广泛影响。

Online misinformation is an obvious short-term threat, and autonomous warfare seems plausible in the medium term. Farther out on the horizon lurks the promise of artificial general intelligence (AGI), the still uncertain point where AI exceeds human performance at any given task, and the (admittedly speculative) peril that AGI could become self-directed, self-replicating, and self-improving beyond human control.

从短期来看,人工智能将具备在网络上传播虚假信息的能力;从中期来看,人工智能将具备操纵战争的能力。在不久的将来,通用人工智能将成为现实,即一种能够在各种任务中表现出超越人类智能的计算机系统,且具备自我指导、自我复制、自我改进等超出人类控制的能力。

AI is not the first technology with some of these potent characteristics, but it is the first to combine them all. AI systems are not like cars or airplanes, which are built on hardware amenable to incremental improvements and whose most costly failures come in the form of individual accidents. Nor are they like chemical or nuclear weapons, which are difficult and expensive to develop and store, let alone secretly share or deploy.

人工智能并非第一个具有上述特征的技术,而是一个高新技术的整合体。人工智能不同于互不关联、依赖渐进式创新的硬件技术(例如汽车、飞机等),也不同于制造成本高昂、维护困难、应用复杂的化学或核武器。

Image source: Dawson College. Author: Michel Fournier-Simard

As their enormous benefits become self-evident, AI systems will only grow bigger, better, cheaper, and more ubiquitous. They will even become capable of autonomy, able to achieve concrete goals with minimal human oversight, and potentially of self-improvement.

随着人工智能优势的凸显,人工智能将变得更加智能、高效、便宜和普及,并具备一定的自主性(例如能够在有限的人为监督下完成特定任务),以及自我改进的能力。

Any one of these features could challenge traditional governance models and render them hopelessly inadequate. This all means that, at least for the next few years, AI’s trajectory will be largely determined by the decisions of a handful of private businesses, regardless of what policymakers in Brussels or Washington do. In other words, technologists, not policymakers or bureaucrats, will exercise authority over a force that could profoundly alter the power of nation-states and how they relate to each other. That makes the challenge of governing AI unlike anything governments have faced before, more delicate, and higher stakes.

然而,一旦人工智能的能力过于强大,就可能会对传统的治理模式构成挑战。这意味着,在未来几年里,人工智能的发展将主要由少数企业主导,而并非政府机构。换言之,人工智能研究人员可能将获得深刻改变国家权力及国际关系的能力。这其中涉及的微妙性和风险性,使得人工智能的挑战不同于世界以往面临的任何挑战。

Governments are already behind the curve. Most proposals for governing AI comprise rules hashed out by political leaders and bureaucrats sitting around a table with little knowledge of the flood engulfing our global community.

政府机构在人工智能治理方面取得的进展乏善可陈。大多数有关人工智能治理的政策建议,均是由缺乏对人工智能有深刻理解的政策专家提出的。

Attempts to regulate AI

人工智能监管在世界各国的进展情况

China’s recent rules create new requirements for how algorithms are built and deployed and for how sources of information are used. Those measures are laying the intellectual and bureaucratic groundwork for a comprehensive national AI law that China will likely release in the years ahead, a potentially momentous development for global AI governance on the scale of the European Union’s pending AI Act. Together, these moves are turning China into a laboratory for experiments in governing perhaps the most impactful technology of this era.

一些国家对如何构建和使用算法和信息源提出了新的要求,这对于全球人工治理具有潜在的重大意义,其意义不亚于欧盟的《人工智能法案》。这很可能将成为未来中国综合性人工智能法律的知识和政治基础,正在推动中国成为人工智能治理的“试验田”。

By rolling out some of the world’s first binding national regulations on artificial intelligence, China has taken significant steps in regulating AI and other emerging technologies. The Chinese government recognized early on the immense potential of AI, while also acknowledging the need for responsible and ethical use.

中国发布的全球首部关于生成式人工智能的法规,标志着中国在新兴技术监管领域迈出了重要一步。中国之所以能够取得如此成就,其主要原因是因为中国早就认识到人工智能蕴含的巨大潜力及以负责任、符合道德规范的方式使用人工智能的必要性。

China has recognized the immense opportunities presented by the digital economy and has taken significant steps to capitalize on them. In August 2023, China implemented national regulations on generative AI (the “Interim Measures”), which sit alongside its Cybersecurity Law protecting individuals and organizations in cyberspace.

认识到数字经济带来的重大机遇,中国采取了一系列积极措施。例如,2023年,中国推出了《生成式人工智能服务管理暂行办法》(以下简称为《暂行办法》),并进一步完善了其网络安全法,以便更好地保护个人和组织的网络安全。

To foster innovation and collaboration, China has created AI-specific development zones and initiated pilot projects. The country has set ambitious goals for the future, with a vision of raising the output of core digital-economy industries to 10 per cent of the country’s GDP by 2025. Additionally, China aims to connect a larger number of households to high-speed broadband, with a target of at least 1 gigabit per second.

中国为了促进科技创新与合作,设立了人工智能研发基地和人工智能试点项目,并设定了一系列宏大的目标,其中包括在2025年之前将核心数字经济产业的份额提高到中国国内生产总值的10%。此外,中国的另一个目标是让更多的家庭获得每秒至少1千兆比特(1Gbps)的宽带。

China will also enhance its basic research capabilities in “strategic areas” such as sensors, quantum information, communications, integrated circuits, key software, big data, AI, blockchain and new materials. The country plans to attain self-sufficiency in basic hardware and software, core electronic components, key basic materials, and production equipment to enhance supply chain security in key industries such as 5G, integrated circuits, new energy vehicles, AI and the industrial internet.

中国将提升在传感器、量子信息、通信、集成电路、关键软件、大数据、人工智能、区块链、新材料等“战略领域”的基础研究能力,并在基础软硬件、核心电子元器件、关键基础材料、生产设备等方面实现自给自足,以此加强5G、集成电路、新能源汽车、人工智能、工业互联网等重点领域的供应安全。

The digital economy plan aims to increase the size of the software and information technology service industry from 8.2 trillion RMB (about US$1.3 trillion) currently to 14 trillion RMB by 2025, and to boost digital trade from 37.2 trillion RMB to 46 trillion RMB over the same period.

中国《“十四五”数字经济规划》的目标是,在2025年之前将软件和信息技术服务业规模从目前的8.2万亿元人民币(约合1.3万亿美元)提高到14万亿元人民币,数字贸易从37.2万亿元人民币提高到46万亿元人民币。

These initiatives create a supportive environment for AI companies and researchers, encouraging the growth of the industry while ensuring compliance with regulations. The new “Interim Measures” are the latest addition to China’s evolving regulatory framework on AI, intended to establish a comprehensive governance model. Through them, the Chinese government demonstrates its intention to shape its own governance model.

中国采取的多项措施可以为人工智能公司和研究人员营造良好的创新环境,并有助于在确保法规遵从性的同时,鼓励人工智能产业的发展。《暂行办法》是中国人工智能监管框架的一个重要补充,其目的是构建综合性人工智能治理模式。这同时也表明,中国致力于构建自己的人工智能治理模式。

It remains to be seen how China’s approach shapes the development and use of generative AI in the country and whether it serves to encourage the development of this ground-breaking technology.

中国将如何规范生成式人工智能的开发和应用,以及相关措施是否有助于鼓励人工智能的发展,仍有待观察。

Numerous jurisdictions, including the European Union and its 27 member states, the United States, the United Kingdom, Japan, India, the UAE, Saudi Arabia, and Singapore, have developed dedicated action plans for the digital economy.

欧盟(包括27个成员国)、美国、英国、日本、印度、阿联酋、沙特阿拉伯、新加坡等国均制定了数字经济专项行动计划。

The European Union is in the final stage of finalizing a groundbreaking AI Act, a comprehensive piece of product safety legislation that seeks to regulate the development, trade, and use of AI-driven products and services. The Act focuses on high-risk AI systems, particularly those involved in decisions on job or loan applications. It includes provisions that require companies to disclose the data sources used to create and train their AI models, holding them liable for any misuse. The EU aims to prohibit AI practices that pose unacceptable risks while exempting low-risk systems from specific obligations.

目前,欧盟的《人工智能法案》已经进入立法审议阶段。该法案是一项全面的产品安全立法,旨在规范开发、销售和使用与人工智能相关的产品和服务。《人工智能法案》关注的重点是高风险人工智能,尤其是参与工作或贷款申请决策的人工智能。欧盟要求企业披露用于创建和训练人工智能模型的数据源,并对任何滥用行为承担责任。此外,欧盟禁止企业使用不可接受风险的人工智能,同时对低风险人工智能施加适度的限制。

To enforce the AI Act, a European AI Office is to be established; it will be responsible for issuing opinions, recommendations, and guidance. The Act is anticipated to be fully approved by the end of 2023, although some European companies have expressed concerns about its impact on an evolving technology.

为了推动《人工智能法案》的落地实施,欧盟将设立“欧洲人工智能办公室”,负责提供政策性意见、建议和指导。该法案预计将在2023年年底前获得通过。即便如此,一些欧洲企业仍担心《人工智能法案》可能会对未来人工智能的发展造成不利影响。

Certain US tech executives perceive the EU’s Act as a deliberate protectionist move that slaps limitations on a group of mainly American companies that dominate the AI industry.

有观点指出,《人工智能法案》具有明显的贸易保护主义倾向,其根本目的是对美国的人工智能公司施加限制。

In contrast, the UK has adopted a more principle-based, sectoral approach to AI regulation. Instead of enacting legislation, the UK has outlined five principles (security, transparency, fairness, accountability, and contestability) that UK regulators should consider to best facilitate the safe and innovative use of AI within their respective sectors.

相比之下,英国并未出台与人工智能相关的法案,而是要求监管机构遵守五项基本原则(安全性和稳健性、透明度和可解释性、公平性、问责制和管理,以及可竞争性),以此促进人工智能在各领域中的创新和安全应用。

The US has taken a more cautious approach to AI regulation and currently lacks comprehensive federal legislation. Industries are primarily self-regulating, while some states have implemented AI-specific laws addressing privacy, security, and anti-discrimination concerns. The US approach to AI regulation is characterized by a wait-and-see stance.

美国在人工智能监管方面采取了较为谨慎的态度,目前尚未进行相关的立法。联邦政府鼓励人工智能产业实行自我监管,部分州政府为了解决个人隐私、数据安全以及算法歧视的问题,已经出台了针对人工智能的法律法规。总而言之,美国在人工智能监管方面目前仍处于观望态度。

Indeed, tech companies have been actively advocating for AI regulation. In May 2023, hundreds of leading figures in artificial intelligence issued a joint statement emphasizing the potential existential threat posed by these technologies. Subsequently, companies including Microsoft, OpenAI, Google, Amazon, and Meta signed a voluntary commitment at the White House in July 2023.

事实上,科技公司也一直在积极倡导对人工智能进行监管。2023年5月,数百名人工智能专家联合发表了一份声明,警告人工智能对人类构成的潜在生存威胁。2023年7月,在美国白宫压力之下,微软、人工智能研究公司、谷歌、亚马逊、脸谱网等美国头部人工智能公司向社会做出公开承诺,将以负责任的方式开发人工智能。

The United States Office of Management and Budget is currently developing guidance that will establish specific policies for federal departments and agencies to strengthen AI governance, advance AI procurement, and manage algorithmic risk in order to safeguard the American people’s rights and safety.

为了保护美国人民的利益和安全,美国管理和预算局正在为联邦政府下属机构制定政策指导方针,以强化人工智能治理,推进人工智能采购,以及管理算法风险。

Singapore’s Infocomm Media Development Authority (IMDA) has introduced one of the world’s first master plans for digital infrastructure to power the country’s next chapter of growth. Singapore’s strategic priorities include investing ahead of demand and planning holistically for the entire future-ready digital infrastructure stack: hard infrastructure, physical-digital infrastructure, and soft infrastructure.

新加坡是世界上首个拥有庞大数字基础设施堆栈总体规划项目的国家之一。新加坡的战略重点包括提前投资及全面规划整个面向未来的数字基础设施堆栈(硬基础设施、物理数字基础设施和软基础设施),可为新加坡未来的经济增长提供动力。

In April 2023, Japan hosted the G7 Digital and Technology Ministers’ Meeting in Takasaki. The subsequent Ministerial Declaration emphasized the importance of international discussions on the interoperability between different AI governance frameworks. The declaration also highlighted the responsible use of generative AI, addressing disinformation, and safeguarding intellectual property rights.

2023年4月,七国集团数字技术部长会议在日本群马县高崎市召开。本届会议部长级宣言草案呼吁国际社会就不同人工智能治理框架之间的互操作性进行讨论,并强调以负责任的方式使用生成式人工智能、处理虚假信息以及维护知识产权。

G7 Hiroshima Summit 2023 Venue (Grand Prince Hotel Hiroshima)

The G7 policymakers prioritized generative AI in Hiroshima and stressed the need for international cooperation with like-minded partners to effectively regulate AI. Other urgent and important issues emphasized by G7 members included privacy and data governance, transparency, fairness and bias, human and fundamental rights, security and robustness of AI systems, and impacts on the functioning of democracy.

在日本广岛召开的七国集团峰会上,七国集团领导人就生成式人工智能程序的通用规范交换了意见,并提议与志同道合的国家合作,对人工智能的应用进行监管。此外,七国集团领导人还强调,人工智能公司应该对个人数据和知识产权实施适当的数据输入措施和保护,尊重多样性、公平性、人权等普世价值观,确保人工智能系统的安全性和鲁棒性,不引入破坏民主价值观的程序等指导原则。

Can EU-AI regulation be used as a model?

欧盟人工智能监管框架能否引领世界?

Regulatory efforts to date are in their infancy and still inadequate. While the EU’s AI Act is regarded as the most ambitious attempt at AI governance, it may struggle to keep pace with the rapid advancements in AI models. Additionally, the EU’s regulations may be more tailored to EU-specific needs.

就目前而言,全球在人工智能监管方面尚处于起步阶段,仍存在许多不足之处。虽然欧盟的《人工智能法案》是一项大胆的尝试,但是欧盟对人工智能的监管力度,可能难以跟上人工智能的发展速度。此外,欧盟的法规可能更符合欧盟国家的需求。

In November 2023, the UK is hosting a global AI summit to facilitate discussions on international coordination regarding regulation and how to mitigate AI-related risks.

2023年11月,英国主办首届全球人工智能峰会,旨在讨论人工智能监管方面的国际协作及如何降低人工智能的相关风险。

While industry self-regulation is a crucial initial step, effective regulation of frontier AI models also needs standard-setting processes, registration and reporting requirements, and compliance mechanisms. These foundational elements, combined with industry self-regulation, can help ensure the safe and responsible development and deployment of AI technologies.

虽然行业自律对于人工智能监管至关重要,但是还需要制定监管细则、登记备案和报告制度以及安全标准。上述措施与行业自律相结合,将更有助于确保安全、负责任地管理人工智能的开发和应用。

However, given the present divergence of views and approaches among various stakeholders, and considering the absence of confidence-building measures, formulating and implementing AI governance solutions may seem challenging.

然而,由于目前利益相关方之间在监管措施上仍存在分歧,而且公众对监管机构普遍缺乏信任,故制定多方认可的人工智能监管方案仍困难重重。

Industry self-regulation must nevertheless be complemented by three building blocks for the regulation of frontier models:

具体而言,在行业实行自我监管的同时,还必须辅之以三大基本要素:

(1) Standard-setting processes to identify appropriate requirements for frontier AI developers.

第一,针对人工智能研发人员,制定合理的监管细则。

(2) Registration and reporting requirements to provide regulators with visibility into frontier AI development processes.

第二,制定登记备案和报告制度,以确保人工智能开发过程的可预见性。

(3) Mechanisms to ensure compliance with safety standards for the development and deployment of frontier AI models.

第三,为人工智能的开发和应用制定安全标准。

Regulatory sandboxes can also provide controlled environments for testing and developing innovative technologies while ensuring compliance with safety and ethical standards.

此外,监管沙箱不仅可以为人工智能的测试和创新提供受控环境,还可以确保其符合安全和道德标准。

AI governance must aim to prevent risks before they materialize rather than mitigate them after the fact. This is especially important because AI could weaken democracy in some countries and make it harder for them to enact regulations.

人工智能治理的核心在于防患于未然,并非事后补救,这一点尤其重要。因为人工智能造成的影响可能会削弱民主,阻碍人工智能监管法规的制定。

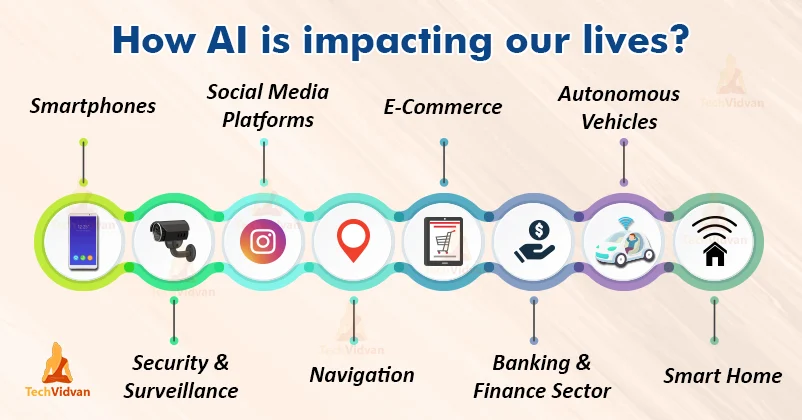

While implementing AI governance solutions may be challenging, it is important to establish a regulatory framework that promotes responsible and ethical AI use.

人工智能治理是一项富有挑战性的任务,其关键在于构建促进负责任、合乎道德地使用人工智能的监管框架。

Industry self-regulation can be an initial step, but it should be complemented by standard-setting processes, registration and reporting requirements, and compliance mechanisms for safety standards. These building blocks can help regulators monitor AI development and deployment, ensuring the technology is used in a manner that aligns with societal values and interests.

推动行业自律是第一步,同时还需要制定监管细则、登记备案和报告制度以及安全标准。这样一来,监管机构便能够有效地监管人工智能的开发和应用,并确保该技术的应用符合社会价值观和人类的共同利益。

It’s worth noting that AI is not the only transformative technology on the horizon. Quantum computing, biotechnology, nanotechnology, and robotics also have the potential to reshape our world. Successfully governing AI can lay the groundwork for governing these technologies as well.

值得注意的是,人工智能并非是当今唯一的变革性技术。量子计算、生物技术、纳米技术和机器人技术也可能像人工智能一样,具有重塑世界的能力。因此,人工智能治理的成败,决定着其他变革性技术治理的成败。

In summary, a tailored and collaborative approach to global AI governance is crucial for creating regulatory frameworks that protect individuals’ rights, promote the most productive aspects of AI, and serve national security interests. By fostering innovation while mitigating risks, we can harness the potential of AI for the benefit of society as a whole.

综上所述,具有针对性和协作性的人工智能治理方案,对于构建全球人工智能监管框架至关重要。只有在促进科技创新的同时,降低科技创新带来的风险,我们才可以充分利用人工智能的潜力来保护个人权利,维护国家安全,以及造福社会。

To that end, national regimes must be aligned with those of other countries so that the technology, which is not limited by borders, can be fully controlled. Otherwise, wildly divergent approaches risk tying the global AI industry up in red tape.

为此,世界各国必须尽可能地在国家体制上保持一致,以便更好地控制人工智能的传播。否则,全球人工智能产业可能将陷入繁文缛节的泥潭。

Any successful attempt to govern AI must be global and tailored to the specific nature of the technology, its challenges, and the structure and balance of power in which it operates.

人工智能治理必须是全球性的,且能够根据技术性质、面临的挑战以及政治结构和权力关系进行调整。

A more reliable regulatory mechanism for governing AI at the global level would probably involve a multi-sectoral approach. In addition to governments and tech companies, scientists, ethicists, trade unions, civil society organizations, and other voices with knowledge of AI outcomes and a stake in its development should be involved in arrangements and regulations to promote its responsible use.

构建全球人工智能监管框架,可能需要全社会的共同参与。这意味着除了政府和科技公司以外,科学家、伦理学家、工会、民间团体等关注人工智能发展的社会群体,也应该积极参与人工智能监管事业,共同推进人工智能的负责任使用。

The alternative, an uncontained AI, would not just pose unacceptable risks to global stability; it would also be bad for business and run counter to every country’s national interests.

不受控制的人工智能,可能会对全球稳定构成不可接受的风险,也不利于商业发展,更不符合世界各国的共同利益。

A comprehensive global regulatory framework should enable the best applications of AI and prevent the worst. Given the speed of technological development, the urgency of such arrangements demands swift thinking and swift action by all concerned.

如何充分利用人工智能的优势及避免人工智能的负面影响,是我们构建全球人工智能监管框架时需要考虑的重要因素。鉴于技术发展和迭代的速度,此事不容迟疑,利益相关方必须立即采取行动。